Act As If You Are a Curator: An AI-Generated Exhibition

PDF: Dickey, Act As If You Are a Curator

Curated by: ChatGPT, with support from Julia McHugh, Julianne Miao, Mark Olson, and Marshall N. Price. Irma Lopez, Alveena Nadim, Maddie Rubin and David Sardá provided research support.

Exhibition schedule: Nasher Museum of Art, Duke University, Durham, NC, September 9, 2023–February 18, 2024

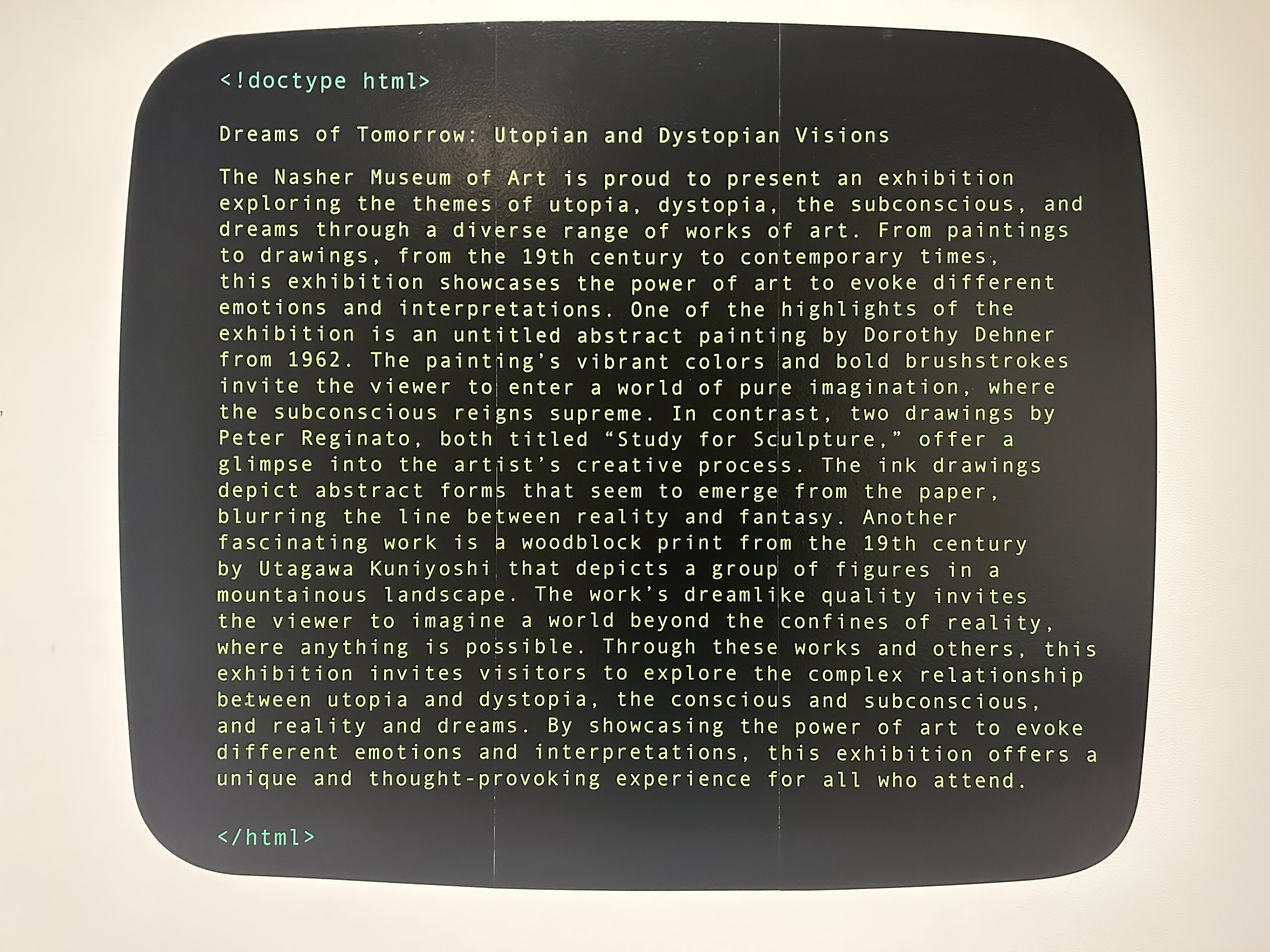

The exhibition Act As If You Are a Curator: An AI-Generated Exhibition at the Nasher Museum of Art at Duke University aimed to surface potential relations and frictions between cultural institutions and emergent artificial intelligence (AI) tools by prompting ChatGPT to curate an exhibition. For the exhibition, which comprised twenty-one objects, museum staff collaborated with Duke faculty and students to extract publicly accessible information pertaining to fourteen thousand objects from the museum’s collection database, to feed this dataset to a custom-trained ChatGPT interface, and to create a set of prompts to instruct the model in developing the exhibition theme, selecting and arranging objects, and writing interpretive text. Thus, Act As If You Are a Curator functions as a conceptual and critical sleeve for an exhibition-within-the-exhibition that ChatGPT titled Dreams of Tomorrow: Utopian and Dystopian Visions (fig. 1). This AI-generated exhibition purported to explore “themes of utopia, dystopia, the subconscious, and dreams through a diverse range of works of art.”

AI as a research field has existed in various forms since the 1940s; art exhibitions exploring the intersections and interactions between human and machine intelligence and creativity also have a lengthy history.1 In its current definition, AI encompasses a range of statistical language and image models that draw on (or are “trained” from) vast datasets of textual or graphical information to generate novel texts or images in response to human-created prompts and in accordance with the algorithms (computational procedures) that govern them. Large Language Models (LLMs), including ChatGPT from the company OpenAI, create complex maps of many thousands of statistical relationships between terms within textual data; queries to ChatGPT can delineate style as well as content, asking the model to draw on the most statistically relevant information in its corpus and to reorganize it in accordance with a given form. ChatGPT’s API (application programming interface) is partially open, which allows for customization of its language-processing capabilities within other applications, though OpenAI does not reveal its source code.

It is vital to ask where Act As If You Are a Curator falls on the spectrum between caving to narratives of the inevitability of AI’s full assimilation within social life and incisively analyzing the layered technological and cultural systems required for it to function. The public launch of ChatGPT in late 2022 has been accompanied by breathless coverage of the sophistication of its language-processing capabilities (as well as those of language and image models from other companies) and its ability to integrate new information, affirming the utopian promise of vastly accelerated scientific and technical knowledge production. Its dystopian potentials, including deleterious effects on labor, education, and politics (as well as the seemingly science-fictional prospect of machine sentience) are also embedded in this discourse. This binary of utopia and dystopia, while grounded in AI research, also plays into the imperatives of technology marketing. Simultaneous narratives of unprecedented creation and destruction claim attention and amass name recognition in an increasingly competitive market, distracting from nuanced public conversation and oversight of the affordances and drawbacks of a field that requires complex infrastructure and vast quantities of data, energy, and capital. With the aim of giving ChatGPT “curatorial agency,” the Nasher has created something of a diagnostic test for visitors’ feelings about the current status of AI itself.2 While this show, produced far more quickly, flexibly, and cheaply than a human-curated exhibition, provides an experimental public launching point for conversations about AI, future interventions might grapple with the specific logics of these models and the often-obscured aims of the institutions and interests behind them. Yet, by involving students in the experiment and taking part in practical and ethical conversations around using and customizing such platforms, the Nasher’s project suggests how related work can perform an increasingly necessary pedagogical function.

As an exhibition, it is a provocative thought experiment: curation as a test of textual patternmaking as opposed to art-historical knowledge. The common interface for the version of ChatGPT used in the exhibition (v. 3.5) uses a dataset of select information on the Web published before late 2021; thus, it could not be prompted to pull from a single source or website. For this reason, preparatory experiments in querying ChatGPT returned limited results directly relevant to the Nasher’s collection. Nasher staff reached out to Mark Olson, associate professor in Art, Art History, & Visual Studies at Duke, to tailor the information ChatGPT could draw on for its curation. Olson, along with an interdisciplinary group of undergraduate students involved with Duke’s Digital Art History and Visual Culture Research Lab, did this by customizing its interface, a project that took a few months and was largely put together with open-source tools, aside from ChatGPT’s API. Olson explains, “As a pedagogical exercise, I wanted my students to be thinking about how these spaces are quite fraught. We know more and more about how these models have relied on scraping data and not respecting attribution and licensing and all these kinds of things.”3 They built a data-scraping tool using Streamlit, a framework for creating web applications using the coding language Python, to capture publicly available textual data from the Nasher’s online collection: metadata about each artwork from the collections database. Thus, rather than ChatGPT drawing on graphical and textual information about the entire collection, it used just textual metadata about the portion of the collection accessible online, as well as its general training data from Web sources.4 It was important for all involved to use only information that is currently public, as it remains unclear how much developer data OpenAI keeps for use of its API—as Olson said, he and the students did not want to “massively feed the machine.”5 This is an essential point: a central question institutions need to consider when using AI platforms is whether access to data will effectively be traded for use of proprietary algorithms. Such concerns emerged with Olson and his students throughout this process, where questions were not just technical but ethical. The extracted textual data from the Nasher was then passed through ChatGPT’s embeddings API, a model that maps relationships among terms, rendering the information as a set of statistical probabilities, output as a database indexing these vectors. This process essentially brought “the Nasher data into the language world-space of ChatGPT.”6 Using LangChain, a set of tools used to bridge different language models, Olson triangulated a chat interface with this vector database and ChatGPT. After this, Olson notes, the curatorial work consisted of prompt creation.

To develop the exhibition concept, the Nasher curatorial team asked the custom ChatGPT interface, “What themes or topics would be most appealing for an exhibition at a university art museum?” Although the model “had many suggestions,” it “consistently returned to” the themes of “utopia, dystopia, dreams, and the subconscious.”7 Using those themes as search terms, the team then prompted ChatGPT to build a checklist of objects for the six-hundred-square-foot gallery, write introductory text and wall labels, and suggest how the works should be sequenced and grouped within the space. Taking place over a few weeks, these prompting sessions were recorded; a small selection of transcribed excerpts are available through the Nasher’s website.

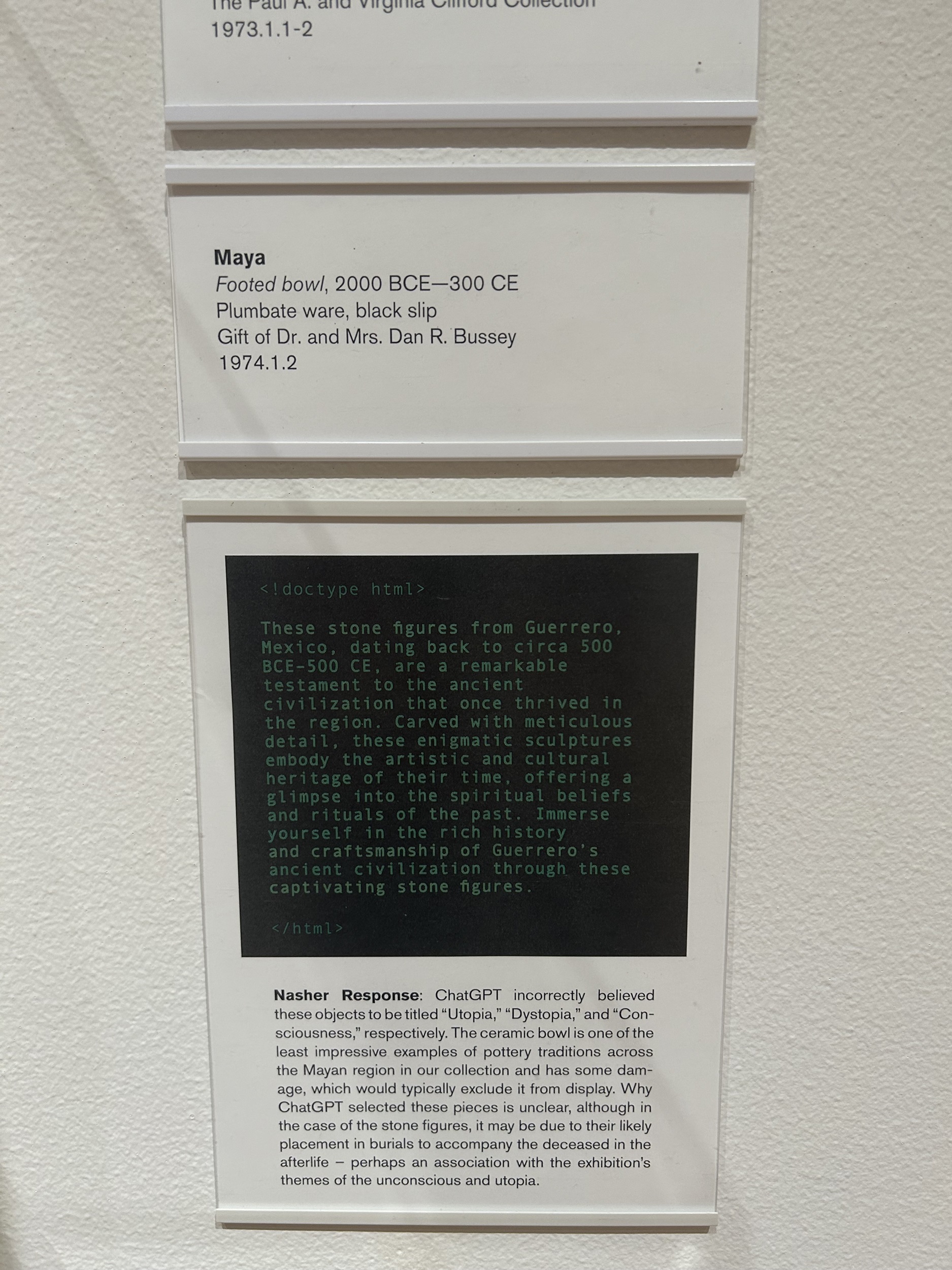

The process yielded surprisingly legible results, with thematically appropriate, if eclectic, selections; the arrangement made formal sense despite ChatGPT’s use of only textual information. Often, the titles of the artworks (with words like “dream” and “consciousness”) help to explain why ChatGPT matched them with the themes. In a few cases, ChatGPT made up titles for works and hallucinated interpretive information.8 On the lefthand side of the gallery, the abstract figuration of Roy Ahlgren’s screenprint The Three Graces VI harmonized with that of Tunji Adeniyi-Jones’s aquatint Astral Reflections and Leonid Lamm’s drawing Dream, as well as with the more representational human figures in Salvador Dalí’s Obsession of the Heart (The World) and Raphael Soyer’s Dreams (fig. 2). Works of geometric abstraction were arranged near one another in the center of the gallery, leading to an architectural motif on the right comprising the anonymous twentieth-century painting Cityscape and a Piranesi etching titled View of the Remains of the Dining Room of Nero’s Golden House; oddly enough, given the “utopia” and “dystopia” elements of the show’s theme, these were the only two works explicitly representing places. Next to the etching were a set of three, inexplicably chosen Mayan sculptures; the label annotation revealed that ChatGPT mistitled them “Utopia,” “Dystopia,” and “Consciousness” (figs. 3, 4). On the far right, ChatGPT placed images with authoritative, otherworldly figures at their core: Nicolas Monro’s Cosmic Consciousness, Juliana Seraphim’s Asturias #2, and Salvador Dalí’s The Mystery of Sleep (The Hermit).

Through annotations placed below ChatGPT’s more egregiously hallucinatory wall texts, visitors were guided to consider rationales for the model’s decisions and to attend to the vacuousness of its vocabulary. The selected corrections of ChatGPT’s labels focus on errors made by the system because of its training data, helpfully connecting the model’s assumptions to possible issues in the works’ metadata, as well as to problems inherent in equating knowledge about a topic with statistical probabilities in language. These errors often took the form of retitling objects, mislabeling media, and including information gleaned from non-museum training data and inaccurately paired with exhibition objects. The tone of ChatGPT’s wall labels imitates a version of “curatorspeak”: effusive, vague, and with stereotypical but contextually meaningless terms, like “intricate brushwork” and “vivid imagery.” The critique of these labels in Act As If You Are a Curator is useful not for communicating any productive insights about the artworks to viewers but instead as a spur to reflect on the overwhelmingly formulaic ways that most art is described. ChatGPT does not actually “see” the art but instead reorganizes fragmented information about it according to a complex set of statistical probabilities of the English language. What is unclear—and what the show does not address—is whether LLMs, in their current form, might reinforce such rhetoric, fabricating more constrictive dimensions for articulating what is perceptible.

Piranesi’s classicism juxtaposed with misidentified Mayan sculptures might provoke an attempt at explaining the works’ adjacency. Has ChatGPT, for example, found a relationship between historical memory pertaining to past civilizations and ideas of utopia and dystopia? Well, no. Given the curator, such interpretation is emphatically a function of the visitor’s own patternmaking tendencies. That is, when we take a collection of objects and are told that they have X, Y, and Z in common with one another, it is very likely the case that we will see X, Y, and Z in them. Thus, experiments like this can be a window into the potentially destabilizing power of LLMs to capitalize on our hallucinatory functions while obscuring their own internal patternmaking mechanisms. Anna Munster, following William James’s distinction between perception and the perceptible—the idea that the “perceptible” arises after the action of perception and then is recognized, or matched, to what is already known⎯has argued that in the algorithmic generation of patterns within large datasets, patterns are “made perceptible” rather than perception occurring. Thus, “a pattern seen in data is an example of recognition, albeit machine recognition . . . but the parameters, relations and arrangements that organize and make sense of data are not visualized.”9 These nonvisualized processes have become imperceptible. Because of this, a future avenue for cultural institutions’ engagements with AI might instead focus on this “imperceptible.” Much of how these current tools work is imperceptible to the typical user.

On the other hand, as Nasher curatorial assistant Juliane Miao notes, experiments like Act As If You Are a Curator can allow an alternate nonhuman perspective of the collection and of cataloguing practices that might reveal things humans overlook—for instance, works that have rarely (or never) been on display.10 This sense that AI might offer something more to culture work than just labor saving informs a number of initiatives within museums and archives exploring uses for AI in collections cataloguing and retrieval, object interpretation, and visitor engagement.11 There are simultaneous efforts to weigh the numerous intellectual and ethical risks of such emergent tools, acknowledging the biases and positionalities of tool creators, the large carbon footprint of some generative AI software, and potential adverse effects on labor.12 The degree of arbitrariness in both human and AI curation is worth exploring, yet even as acknowledgment and interrogation of the political factors that inform collection and curation change museums and other cultural institutions for the better, the exhibition did not approach ChatGPT’s decision-making processes in a way that reflects this.

Ben Lerner’s recent short story “The Hofman Wobble: Wikipedia and the Problem of Historical Memory” ends with a now-familiar gesture that is evident in the Nasher show, among other examples: a prompt to ChatGPT and the model’s response to that prompt.13 Lerner’s own final lines meditate ambivalently on the narrowing possibilities for political efficacy between increasingly scaled-up techniques for the externalization of human knowledge and the affective flattening and cognitive fragmentation resulting from the attention economy of the first two decades of the twenty-first century. In contrast to Lerner’s refusal to synthesize and resolve competing interpretations of this dilemma, ChatGPT’s conclusion to his story insists that the “fundamental nature of information” is “the essence of knowledge itself.” Forgoing nuance for vapidity, the model reveals its own programming, insisting that information is “not about dominating or manipulating, but about empowering and illuminating.” This is an instrumental perspective from the tool, designed to alleviate troublesome doubts about the ability of “the collective minds of society” to “discern truth from falsehood.” In this way, Lerner’s use of the gesture draws attention to ChatGPT’s encoded ideology, which proclaims an “unstoppable force of data” beyond “any individual’s control.” The function of this ideology, like all ideologies, is to produce particular subjects: in this instance, ones comfortable with ditching agency in information flows while spiritually grounding themselves in the certainty of fact. One of the few responsible rationales for the current use of this familiar gesture—perhaps its most important use in art contexts—is interrogating and complicating the story that ChatGPT (and OpenAI) tells about its own inevitability.

Thus, an essential part of Act As If You Are a Curator concerns the creation of atypical users attuned to how LLMs function, what distinguishes their modes of patternmaking, the ethical issues that arise from training AI on large datasets, and the ideological functions of software. This educational element of manipulating what OpenAI makes manipulable is arguably the most successful element of the project and what distinguishes it from the familiar gesture of testing ChatGPT’s creative capacities. The process of involving students in this application of ChatGPT to museum curation can be a constructive step in developing critical understandings of the tool’s affordances, drawbacks, and unacceptable breaches of the public trust. But future experiments with AI-based curation should more publicly document and open up instances where datasets, programming decisions, and marketing narratives function as a means of obscuring the limits and incompleteness of AI, sharing with museum visitors the process of deciding which platforms (and companies) cultural institutions choose to use and why, highlighting points of human decision, conversation, and compromise. By facilitating public inquisitiveness about the possible uses of LLMs and what constraints can be set for them, institutions can more assiduously probe the “imperceptible” within AI models, complicating narratives of their inevitability and of the utopia/dystopia binary.

Cite this article: Erin Dickey, “Act As If You Are a Curator: An AI-Generated Exhibition,” Panorama: Journal of the Association of Historians of American Art 10, no. 1 (Spring 2024), https://doi.org/10.24926/24716839.18990.

Notes

- First conceived by Warren McCullough and Walter Pitts in 1943, the artificial neuron, a mathematical function serving as a model of human biological neurons, was already a topic of conversation at the Macy Conference on Cybernetics in 1946. One of the key assumptions underlying early artificial intelligence research was that cognition is rule-bound; thought was represented as essentially the manipulation of algorithms. See N. Katherine Hayles, “Contesting for the Body of Information: The Macy Conferences on Cybernetics (1946 and 1953),” in Systems, ed. Edward Shanken, Documents of Contemporary Art (London: Whitechapel Gallery, 2015), 41. Among a range of other art and technology exhibitions and initiatives from the 1960s, Jasia Reichardt’s Cybernetic Serendipity (1968) featured machines designed to automatically generate text, music, and drawings; Jasia Reichardt, ed., Cybernetic Serendipity: The Computer and the Arts (London: Studio International, 1968). ↵

- Julianne Miao, interview with the author, December 20, 2023. ↵

- Mark Olson, interview with the author, January 17, 2024. ↵

- Olson notes that the “temperature” balance between the custom dataset and ChatGPT’s general dataset could be calibrated (that is, the team could choose to rely less on the general data and more on custom data, or vice versa). Olson, interview. ↵

- Olson, interview. ↵

- Olson, interview. ↵

- “Behind the Scenes of an AI-generated Exhibition,” Nasher Museum of Art, September 8, 2023, https://nasher.duke.edu/stories/behind-the-scenes-of-an-ai-generated-exhibition. ↵

- In AI research, “hallucination” refers to inaccurate or nonsense responses generated by the model. ↵

- Anna Munster, An Aesthesia of Networks: Conjunctive Experience in Art and Technology (Cambridge, MA: MIT Press, 2013), 82. ↵

- Miao, interview. ↵

- Among others, see the Frick Collection’s project with Stanford and Cornell Universities to enhance discoverability of their photo archive and the UK National Archives’ experiments with automated processes for digital records selection. “AI and the Digital Photoarchive,” The Frick Collection Library, accessed December 20, 2023, https://www.frick.org/library/digitalarthistory/projects; “Using AI for Digital Selection in Government,” National Archives (UK), accessed December 20, 2023, https://www.nationalarchives.gov.uk/information-management/manage-information/preserving-digital-records/research-collaboration/using-ai-for-digital-selection-in-government/. Uses of ChatGPT and other LLMs for art history chatbots are also under consideration at a number of museums, including the National Gallery of Art. ↵

- Instructive is the “AI Values Statement,” Smithsonian Institution, accessed December 20, 2023, https://datascience.si.edu/ai-values-statement. Thank you to Jennifer Snyder, oral history archivist at the Archives of American Art, Smithsonian Institution, for directing me to this resource. ↵

- Ben Lerner, “The Hofman Wobble: Wikipedia and the Problem of Historical Memory,” Harper’s Magazine (December 2023), https://harpers.org/archive/2023/12/the-hofmann-wobble-wikipedia-and-the-problem-of-historical-memory. ↵

About the Author(s): Erin Dickey is a Chester Dale Predoctoral Fellow, Center for Advanced Study in the Visual Arts, National Gallery of Art, and PhD candidate at University of North Carolina–Chapel Hill.